AI agents have changed how software developers build intelligent applications. AI agent frameworks provide the infrastructure, tools, and methodologies needed to create autonomous systems that can reason, plan, and execute complex tasks with minimal human intervention.

In 2025, AI agents have evolved from simple chatbots to sophisticated systems capable of multi-step reasoning, tool usage, and collaborative problem-solving. For developers looking to harness this technology, choosing the right framework is crucial for success.

This comprehensive guide explores the 11 best AI agent frameworks available today, comparing their features, strengths, weaknesses, and ideal use cases to help you make an informed decision for your next project.

AI agent frameworks are software platforms that enable developers to build autonomous AI systems capable of:

These frameworks typically leverage Large Language Models (LLMs) as their cognitive engine, combined with specialized components for memory, tool usage, planning, and execution.
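To make the interplay of these components concrete, here is a minimal, framework-agnostic sketch of an agent loop. Everything in it is an assumption for illustration: `fake_llm` stands in for a real model call, `TOOLS` is a hypothetical tool set, and the `tool:input` / `FINAL:answer` text protocol is invented for this example rather than taken from any framework.

```python
from typing import Callable, Dict, List

# Hypothetical tools: plain functions the agent can call by name.
TOOLS: Dict[str, Callable[[str], str]] = {
    "calculator": lambda expr: str(sum(int(n) for n in expr.split("+"))),
    "echo": lambda text: text,
}


def fake_llm(goal: str, memory: List[str]) -> str:
    """Stand-in for an LLM call (assumption): returns 'tool:input' or 'FINAL:answer'."""
    if not memory:
        # Planning step: nothing observed yet, so ask for a tool call.
        return "calculator:2+3"
    # An observation exists, so finish with the latest one.
    return f"FINAL:{memory[-1]}"


def run_agent(goal: str, max_steps: int = 5) -> str:
    """Loop: ask the model what to do, execute the tool, store the result in memory."""
    memory: List[str] = []
    for _ in range(max_steps):
        decision = fake_llm(goal, memory)
        kind, _, payload = decision.partition(":")
        if kind == "FINAL":
            return payload
        observation = TOOLS[kind](payload)  # execution step
        memory.append(observation)          # memory step
    return "stopped: step limit reached"


answer = run_agent("What is 2 + 3?")
print(answer)
```

Real frameworks replace each stub with something richer (a hosted model, typed tool schemas, persistent memory), but the control flow is broadly similar.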

LangChain is an open-source framework that has established itself as one of the most popular choices for building AI-powered applications. Its best-known feature is the ability to chain multiple large language model (LLM) calls together and connect them to tools, APIs, and external data sources. This modular, composable approach lets developers build complex, multi-step applications, such as chatbots, agents, and retrieval-augmented generation (RAG) systems, with more flexibility than working directly with raw LLM APIs.

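```python
from langchain.agents import AgentExecutor, create_react_agent
from langchain_community.tools import DuckDuckGoSearchRun
from langchain_core.prompts import PromptTemplate
from langchain_core.tools import Tool
from langchain_openai import ChatOpenAI

# Define tools the agent can use
search_tool = DuckDuckGoSearchRun()
tools = [
    Tool(
        name="Search",
        func=search_tool.run,
        description="Useful for searching the internet for current information",
    )
]

# Initialize the language model
llm = ChatOpenAI(model="gpt-4")

# create_react_agent expects a prompt template with the {tools}, {tool_names},
# {input}, and {agent_scratchpad} variables, not a plain string
prompt = PromptTemplate.from_template(
    """You are a helpful AI assistant. You have access to the following tools:

{tools}

Use the following format:

Question: the input question you must answer
Thought: think about what to do next
Action: the action to take, one of [{tool_names}]
Action Input: the input to the action
Observation: the result of the action
... (Thought/Action/Action Input/Observation can repeat)
Thought: I now know the final answer
Final Answer: the final answer to the original question

Question: {input}
Thought:{agent_scratchpad}"""
)

# Create the agent with the ReAct prompting pattern
agent = create_react_agent(llm, tools, prompt)

# Create an agent executor
agent_executor = AgentExecutor(agent=agent, tools=tools, verbose=True)

# Run the agent
response = agent_executor.invoke({"input": "What are the latest developments in AI agent frameworks?"})
print(response["output"])
```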

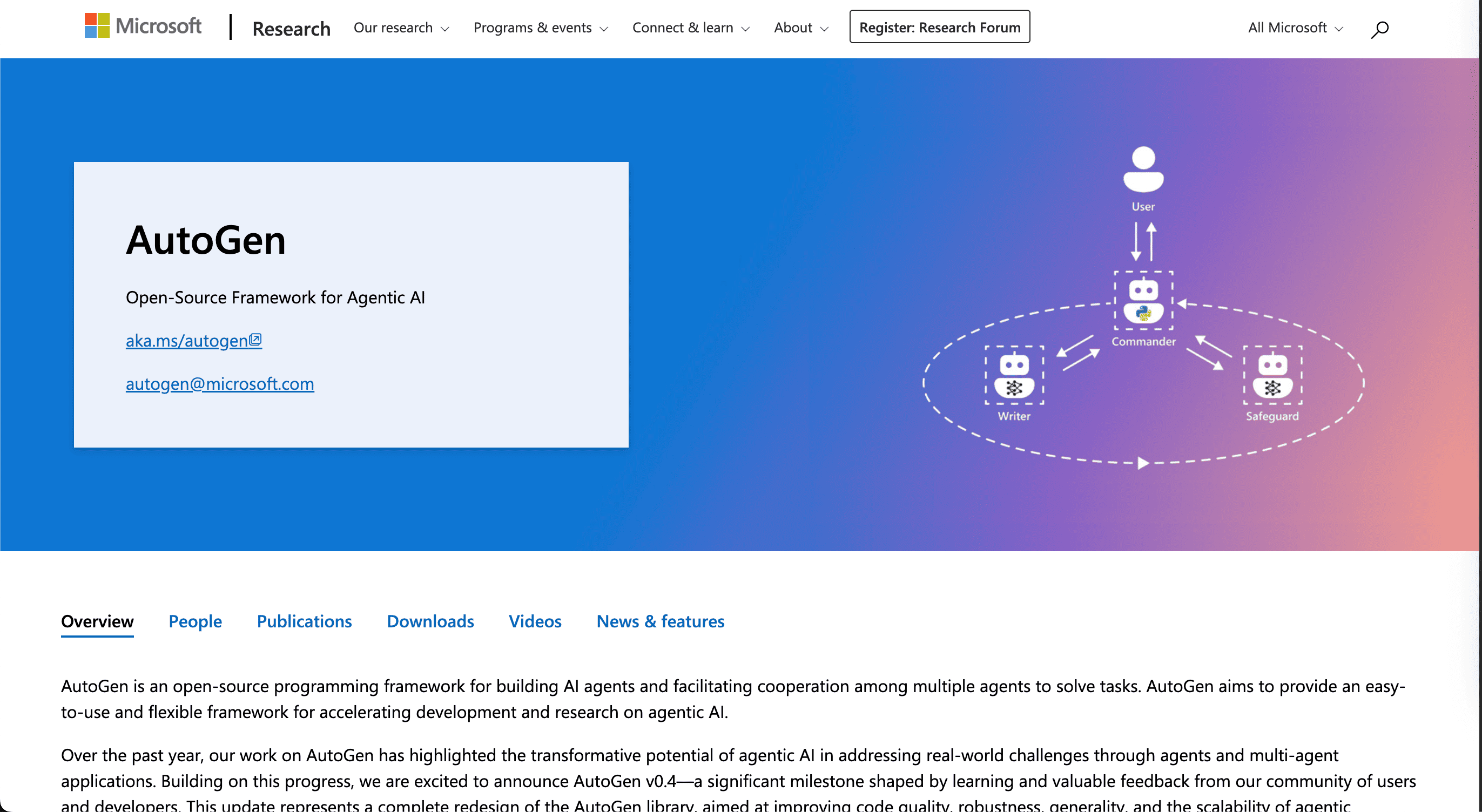

AutoGen is an open-source programming framework developed by Microsoft Research, designed for building and managing AI agents with advanced collaboration capabilities.

AutoGen's actor-based architecture and its focus on agent collaboration suit domains such as business process automation, finance, and healthcare. Its core feature is the orchestration of specialized, conversable, and customizable agents, which makes sophisticated, scalable AI applications more accessible to build.

```python
import autogen

# Define LLM configuration
llm_config = {
    "config_list": [{"model": "gpt-4", "api_key": "your-api-key"}]
}

# Create an AssistantAgent
assistant = autogen.AssistantAgent(
    name="assistant",
    llm_config=llm_config,
    system_message="You are a helpful AI assistant.",
)

# Create a UserProxyAgent
user_proxy = autogen.UserProxyAgent(
    name="user_proxy",
    # Ask for human input only when a termination message arrives
    # or the auto-reply limit is reached
    human_input_mode="TERMINATE",
    max_consecutive_auto_reply=10,
    # "content" can be None (e.g. for tool calls), so fall back to ""
    is_termination_msg=lambda x: (x.get("content") or "").rstrip().endswith("TERMINATE"),
    code_execution_config={"work_dir": "coding"},
)

# Initiate chat between agents
user_proxy.initiate_chat(
    assistant,
    message="Write a Python function to calculate the Fibonacci sequence.",
)
```
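The termination mechanism used above can be illustrated without AutoGen at all. The following sketch is plain Python under stated assumptions: `assistant_reply` and `user_proxy_reply` are hypothetical stand-ins for the two agents, and only the "ends with TERMINATE" convention is taken from the example.

```python
from typing import List, Tuple


def assistant_reply(message: str) -> str:
    """Hypothetical assistant: drafts an answer, then signals completion once approved."""
    if "looks good" in message.lower():
        return "Glad it works. TERMINATE"
    return "Here is a draft: def fib(n): ..."


def user_proxy_reply(message: str) -> str:
    """Hypothetical user proxy: approves whatever the assistant sends."""
    return "Looks good, thanks."


def is_termination_msg(content: str) -> bool:
    # Same convention as the AutoGen example: the reply ends with TERMINATE.
    return content.rstrip().endswith("TERMINATE")


def converse(first_message: str, max_turns: int = 10) -> List[Tuple[str, str]]:
    """Alternate between the two agents until termination or the turn limit."""
    transcript = [("user_proxy", first_message)]
    message = first_message
    for _ in range(max_turns):
        reply = assistant_reply(message)
        transcript.append(("assistant", reply))
        if is_termination_msg(reply):
            break
        message = user_proxy_reply(reply)
        transcript.append(("user_proxy", message))
    return transcript


transcript = converse("Write a Python function to calculate the Fibonacci sequence.")
for speaker, text in transcript:
    print(f"{speaker}: {text}")
```

The turn limit plays the same role as `max_consecutive_auto_reply`: it bounds the conversation even if no agent ever emits the termination marker.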


CrewAI is an open-source multi-agent orchestration framework built in Python, designed for building collaborative AI agent systems that work together like a real team.

```python
from crewai import Agent, Task, Crew
from langchain_openai import ChatOpenAI

# Initialize the language model
llm = ChatOpenAI(model="gpt-4")

# Define agents with specific roles
researcher = Agent(
    role="Research Analyst",
    goal="Discover and analyze the latest trends in AI technology",
    backstory="You are an expert in AI research with a keen eye for emerging trends",
    verbose=True,
    llm=llm,
)

writer = Agent(
    role="Technical Writer",
    goal="Create comprehensive reports based on research findings",
    backstory="You are a skilled technical writer who can explain complex concepts clearly",
    verbose=True,
    llm=llm,
)

# Define tasks for each agent
research_task = Task(
    description="Research the latest developments in AI agent frameworks",
    expected_output="A comprehensive analysis of current AI agent frameworks",
    agent=researcher,
)

writing_task = Task(
    description="Write a detailed report on AI agent frameworks based on the research",
    expected_output="A well-structured report on AI agent frameworks",
    agent=writer,
    context=[research_task],  # The writing task depends on the research task
)

# Create a crew with the agents and tasks
crew = Crew(
    agents=[researcher, writer],
    tasks=[research_task, writing_task],
    verbose=True,
)

# Execute the crew's tasks
result = crew.kickoff()
print(result)
```

Semantic Kernel is an open-source development kit for building AI agents that supports multiple programming languages and enables integration of AI models and services.

```python
import asyncio

import semantic_kernel as sk
from semantic_kernel.connectors.ai.open_ai import OpenAIChatCompletion
from semantic_kernel.planning import ActionPlanner

# Note: this example uses the pre-1.0 Python API (add_chat_service,
# create_semantic_function, ActionPlanner); 1.x releases use different names.

# Initialize the kernel
kernel = sk.Kernel()

# Add OpenAI service
api_key = "your-api-key"
model = "gpt-4"
kernel.add_chat_service("chat_completion", OpenAIChatCompletion(model, api_key))

# Create a semantic function using natural language
prompt = """
Generate a creative story about {{$input}}.
The story should be engaging and approximately 100 words.
"""

# Register the function in the kernel
story_function = kernel.create_semantic_function(prompt, max_tokens=500)

# Execute the function
result = story_function("a robot learning to paint")
print(result)


async def main():
    # Create a simple agent using Semantic Kernel: define the planner
    planner = ActionPlanner(kernel)

    # Create and execute a plan (await is only valid inside a coroutine)
    plan = await planner.create_plan("Write a poem about artificial intelligence")
    plan_result = await plan.invoke()
    print(plan_result)


asyncio.run(main())
```

LangGraph is an open-source AI agent framework created by LangChain for building and managing complex generative AI workflows.

```python
from typing import TypedDict, Annotated, Sequence

from langgraph.graph import StateGraph, END
from langchain_openai import ChatOpenAI
from langchain_core.messages import HumanMessage, AIMessage


# Define the state structure
class AgentState(TypedDict):
    messages: Annotated[Sequence[HumanMessage | AIMessage], "The messages in the conversation"]
    next_step: Annotated[str, "The next step to take"]


# Initialize the language model
llm = ChatOpenAI(model="gpt-4")


# Define the nodes (steps in the workflow)
def research(state: AgentState) -> AgentState:
    messages = state["messages"]
    response = llm.invoke(messages + [HumanMessage(content="Research this topic thoroughly.")])
    return {"messages": state["messages"] + [response], "next_step": "analyze"}


def analyze(state: AgentState) -> AgentState:
    messages = state["messages"]
    response = llm.invoke(messages + [HumanMessage(content="Analyze the research findings.")])
    return {"messages": state["messages"] + [response], "next_step": "conclude"}


def conclude(state: AgentState) -> AgentState:
    messages = state["messages"]
    response = llm.invoke(messages + [HumanMessage(content="Provide a conclusion based on the analysis.")])
    return {"messages": state["messages"] + [response], "next_step": "end"}


# Create the graph
workflow = StateGraph(AgentState)

# Add nodes
workflow.add_node("research", research)
workflow.add_node("analyze", analyze)
workflow.add_node("conclude", conclude)

# Add edges
workflow.add_edge("research", "analyze")
workflow.add_edge("analyze", "conclude")
workflow.add_edge("conclude", END)

# Set the entry point
workflow.set_entry_point("research")

# Compile the graph
agent = workflow.compile()

# Execute the workflow
result = agent.invoke({
    "messages": [HumanMessage(content="Tell me about AI agent frameworks")],
    "next_step": "research",
})

# Print the final messages
for message in result["messages"]:
    print(f"{message.type}: {message.content}\n")
```
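In the example above the edges are fixed, so the `next_step` field is carried in the state but never consulted. As a rough illustration of how a state field could drive routing instead, here is a plain-Python sketch that does not use LangGraph. The `State` type, the `NODES` table, and the `run` helper are all hypothetical names introduced for this example.

```python
from typing import Callable, Dict, List, TypedDict


class State(TypedDict):
    notes: List[str]
    next_step: str


# Each node returns a new state and names the node that should run next.
def research(state: State) -> State:
    return {"notes": state["notes"] + ["researched"], "next_step": "analyze"}


def analyze(state: State) -> State:
    return {"notes": state["notes"] + ["analyzed"], "next_step": "conclude"}


def conclude(state: State) -> State:
    return {"notes": state["notes"] + ["concluded"], "next_step": "end"}


NODES: Dict[str, Callable[[State], State]] = {
    "research": research,
    "analyze": analyze,
    "conclude": conclude,
}


def run(state: State, max_steps: int = 10) -> State:
    """Follow next_step from node to node until 'end' or the step limit."""
    steps = 0
    while state["next_step"] != "end" and steps < max_steps:
        state = NODES[state["next_step"]](state)
        steps += 1
    return state


final = run({"notes": [], "next_step": "research"})
print(final["notes"])
```

A graph framework adds persistence, branching, and streaming on top, but the idea of nodes that read and update a shared state is the same.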

LlamaIndex is a flexible, open-source data orchestration framework that specializes in integrating private and public data for LLM applications.

```python
from llama_index.core.agent import FunctionCallingAgentWorker
from llama_index.core.tools import FunctionTool
from llama_index.llms.openai import OpenAI


# Define a simple tool function
def search_documents(query: str) -> str:
    """Search for information in the document database."""
    # In a real application, this would query a document store
    return f"Here are the search results for: {query}"


# Create a function tool
search_tool = FunctionTool.from_defaults(
    name="search_documents",
    fn=search_documents,
    description="Search for information in the document database",
)

# Initialize the language model
llm = OpenAI(model="gpt-4")

# Create the agent worker, then wrap it in an agent runner;
# the worker itself does not expose chat()
agent_worker = FunctionCallingAgentWorker.from_tools(
    [search_tool],
    llm=llm,
    verbose=True,
)
agent = agent_worker.as_agent()

# Run the agent
response = agent.chat("Find information about AI agent frameworks")
print(response)
```

OpenAI Agents SDK is a Python-based toolkit for building intelligent autonomous systems that can reason, plan, and take actions to accomplish complex tasks.

```python
import json

from openai import OpenAI

# Note: this example uses the Chat Completions tool-calling API from the
# `openai` package rather than the separate `openai-agents` package.

# Initialize the OpenAI client
client = OpenAI(api_key="your-api-key")

# Define a tool
tools = [
    {
        "type": "function",
        "function": {
            "name": "search_weather",
            "description": "Get the current weather in a given location",
            "parameters": {
                "type": "object",
                "properties": {
                    "location": {
                        "type": "string",
                        "description": "The city and state, e.g., San Francisco, CA",
                    }
                },
                "required": ["location"],
            },
        },
    }
]


# Function to handle the weather search tool
def search_weather(location):
    # In a real application, this would call a weather API
    return f"The weather in {location} is currently sunny with a temperature of 72°F."


# Create an agent that uses the tool
messages = [{"role": "user", "content": "What's the weather like in Boston?"}]
response = client.chat.completions.create(
    model="gpt-4",
    messages=messages,
    tools=tools,
    tool_choice="auto",
)

# Process the response
response_message = response.choices[0].message
messages.append(response_message)

# Check if the model wants to call a function
if response_message.tool_calls:
    # Process each tool call
    for tool_call in response_message.tool_calls:
        function_name = tool_call.function.name
        function_args = json.loads(tool_call.function.arguments)

        # Call the function (fall back to an error string for unknown tools)
        if function_name == "search_weather":
            function_response = search_weather(function_args.get("location"))
        else:
            function_response = f"Unknown tool: {function_name}"

        # Append the function response to messages
        messages.append({
            "tool_call_id": tool_call.id,
            "role": "tool",
            "name": function_name,
            "content": function_response,
        })

    # Get a new response from the model
    second_response = client.chat.completions.create(
        model="gpt-4",
        messages=messages,
    )
    print(second_response.choices[0].message.content)
else:
    print(response_message.content)
```
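As the number of tools grows, the `if function_name == ...` branch in the example above becomes awkward. One common alternative is a small registry that maps tool names to functions. The sketch below is plain Python and makes no API calls. `TOOL_REGISTRY`, `register_tool`, and `dispatch_tool_call` are hypothetical helper names, not part of the `openai` package.

```python
import json
from typing import Callable, Dict

# Hypothetical registry mapping tool names to Python callables.
TOOL_REGISTRY: Dict[str, Callable[..., str]] = {}


def register_tool(fn: Callable[..., str]) -> Callable[..., str]:
    """Decorator that registers a function under its own name."""
    TOOL_REGISTRY[fn.__name__] = fn
    return fn


@register_tool
def search_weather(location: str) -> str:
    # Placeholder result; a real tool would call a weather API.
    return f"The weather in {location} is currently sunny."


def dispatch_tool_call(name: str, arguments_json: str) -> str:
    """Look up a tool by name and call it with JSON-encoded keyword arguments."""
    fn = TOOL_REGISTRY.get(name)
    if fn is None:
        return json.dumps({"error": f"unknown tool: {name}"})
    try:
        kwargs = json.loads(arguments_json)
        return fn(**kwargs)
    except (json.JSONDecodeError, TypeError) as exc:
        # Malformed JSON or wrong argument names are reported back as text.
        return json.dumps({"error": str(exc)})


ok = dispatch_tool_call("search_weather", '{"location": "Boston"}')
missing = dispatch_tool_call("unknown", "{}")
print(ok)
print(missing)
```

Returning errors as strings instead of raising lets the calling loop pass the failure back to the model as the tool result, so the model can retry or explain the problem.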

Atomic Agents is a lightweight, modular framework for building AI agent pipelines that emphasizes atomicity in AI agent development.

```python
from pydantic import BaseModel, Field
from typing import List
import os

# This is a simplified example based on Atomic Agents' approach
# In a real implementation, you would import from the atomic_agents package


# Define input/output schemas
class ResearchQuery(BaseModel):
    topic: str = Field(description="The topic to research")
    depth: int = Field(description="The depth of research required (1-5)")


class ResearchResult(BaseModel):
    findings: List[str] = Field(description="Key findings from the research")
    sources: List[str] = Field(description="Sources of information")


# Define an atomic agent component
class ResearchAgent:
    def __init__(self, api_key: str):
        self.api_key = api_key
        # Initialize any necessary clients or tools

    def process(self, input_data: ResearchQuery) -> ResearchResult:
        # In a real implementation, this would use an LLM to perform research
        print(f"Researching {input_data.topic} at depth {input_data.depth}")

        # Simulate research results
        findings = [
            f"Finding 1 about {input_data.topic}",
            f"Finding 2 about {input_data.topic}",
            f"Finding 3 about {input_data.topic}",
        ]
        sources = [
            "https://github.com/e2b-dev/awesome-ai-agents",
            "https://github.com/e2b-dev/awesome-ai-agents",
        ]
        return ResearchResult(findings=findings, sources=sources)


# Usage example
if __name__ == "__main__":
    # Create the agent
    agent = ResearchAgent(api_key=os.environ.get("OPENAI_API_KEY", "default-key"))

    # Create input data
    query = ResearchQuery(topic="AI agent frameworks", depth=3)

    # Process the query
    result = agent.process(query)

    # Display results
    print("\nResearch Findings:")
    for i, finding in enumerate(result.findings, 1):
        print(f"{i}. {finding}")

    print("\nSources:")
    for source in result.sources:
        print(f"- {source}")
```

RASA is an open-source machine learning framework specialized in building conversational AI applications, focusing on text and voice-based assistants.

```python
# RASA project structure example
# This would typically be spread across multiple files in a RASA project

# domain.yml - Defines the domain of the assistant
"""
version: "3.1"

intents:
  - greet
  - goodbye
  - ask_about_ai_frameworks

responses:
  utter_greet:
    - text: "Hello! How can I help you with AI frameworks today?"
  utter_goodbye:
    - text: "Goodbye! Feel free to ask about AI frameworks anytime."
  utter_about_frameworks:
    - text: "There are several popular AI agent frameworks including LangChain, AutoGen, CrewAI, and more. Which one would you like to know about?"

entities:
  - framework_name

slots:
  framework_name:
    type: text
    mappings:
      - type: from_entity
        entity: framework_name

# Custom actions must be listed in the domain
actions:
  - action_framework_info
"""

# data/nlu.yml - Training data for NLU
"""
version: "3.1"

nlu:
  - intent: greet
    examples: |
      - hey
      - hello
      - hi
      - hello there
      - good morning

  - intent: goodbye
    examples: |
      - bye
      - goodbye
      - see you around
      - see you later

  - intent: ask_about_ai_frameworks
    examples: |
      - tell me about AI frameworks
      - what are the best AI agent frameworks
      - I need information about [LangChain](framework_name)
      - How does [AutoGen](framework_name) work?
      - Can you explain [CrewAI](framework_name)?
"""

# data/stories.yml - Training data for dialogue management
"""
version: "3.1"

stories:
  - story: greet and ask about frameworks
    steps:
      - intent: greet
      - action: utter_greet
      - intent: ask_about_ai_frameworks
      - action: utter_about_frameworks

  - story: ask about specific framework
    steps:
      - intent: ask_about_ai_frameworks
        entities:
          - framework_name: "LangChain"
      - action: action_framework_info
"""

# actions/actions.py - Custom actions
"""
from typing import Any, Text, Dict, List

from rasa_sdk import Action, Tracker
from rasa_sdk.executor import CollectingDispatcher


class ActionFrameworkInfo(Action):
    def name(self) -> Text:
        return "action_framework_info"

    def run(self, dispatcher: CollectingDispatcher,
            tracker: Tracker,
            domain: Dict[Text, Any]) -> List[Dict[Text, Any]]:
        framework = tracker.get_slot("framework_name")

        # The slot is None when no framework was mentioned
        if not framework:
            dispatcher.utter_message(text="Which framework would you like to know about?")
            return []

        if framework.lower() == "langchain":
            dispatcher.utter_message(text="LangChain is an open-source framework for building applications using large language models.")
        elif framework.lower() == "autogen":
            dispatcher.utter_message(text="AutoGen is a framework from Microsoft Research that enables the development of LLM applications using multiple agents.")
        elif framework.lower() == "crewai":
            dispatcher.utter_message(text="CrewAI is a framework for orchestrating role-playing autonomous AI agents.")
        else:
            dispatcher.utter_message(text=f"I don't have specific information about {framework}, but it might be one of the emerging AI agent frameworks.")

        return []
"""

# To train and run a RASA assistant:
# rasa train
# rasa run
```

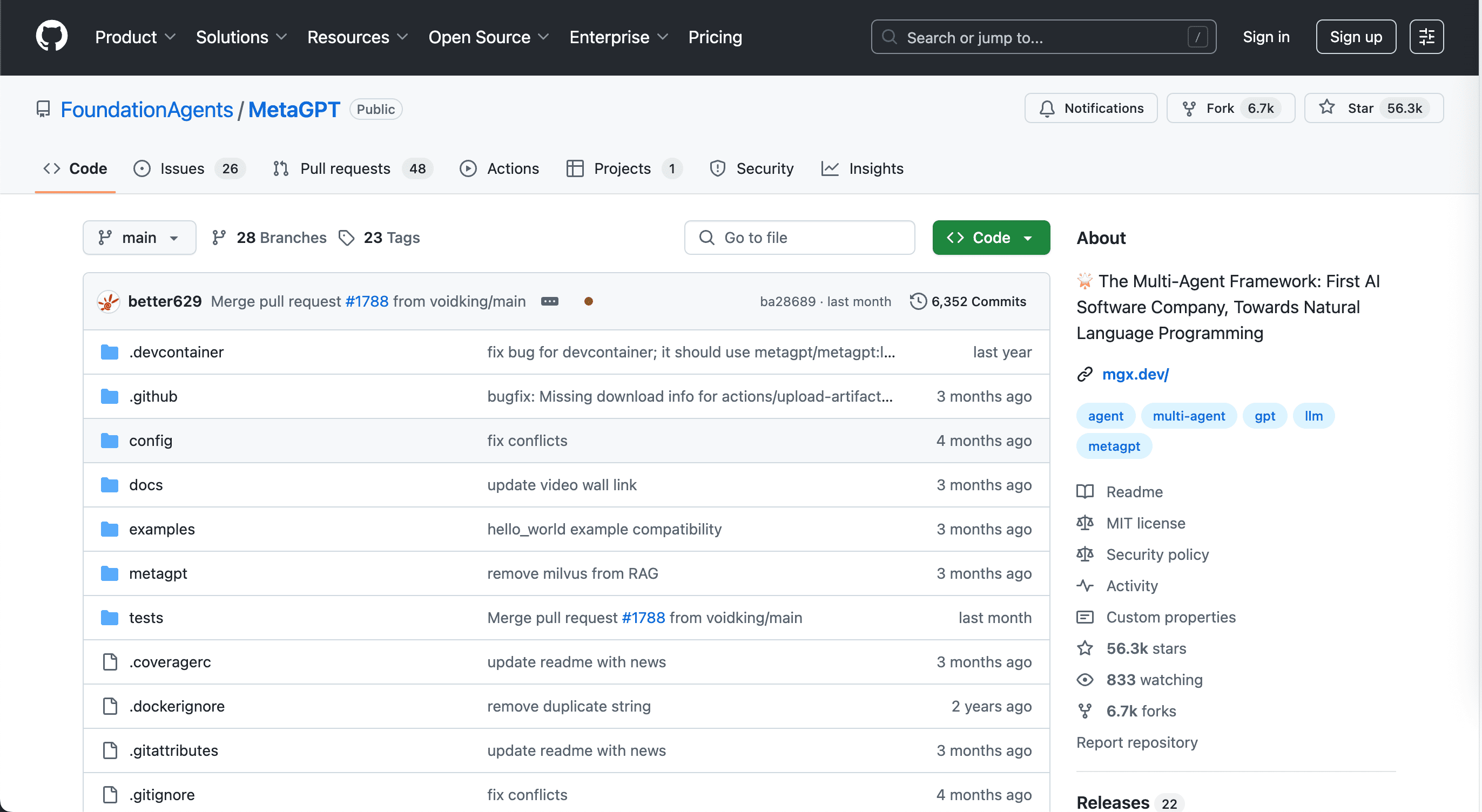

MetaGPT is an open-source multi-agent framework that orchestrates AI agents using LLMs to simulate collaborative problem-solving. Founded by Chenglin Wu, MetaGPT has more than 56K stars on GitHub, a sign of how much developers value its flexibility and easy-to-use model.

```python
import asyncio

from metagpt.roles import (
    ProjectManager,
    ProductManager,
    Architect,
    Engineer,
)
from metagpt.team import Team


async def main():
    # Define the project requirement
    requirement = "Create a web application that allows users to search for and compare AI agent frameworks"

    # Form a team and hire members with different roles
    team = Team()
    team.hire([ProductManager(), ProjectManager(), Architect(), Engineer()])

    # Set a budget (in dollars of API spend) and hand over the requirement
    team.invest(investment=3.0)
    team.run_project(requirement)

    # Start the team working on the requirement for a few rounds
    await team.run(n_round=5)

    # The team will generate:
    # 1. PRD (Product Requirements Document)
    # 2. Design documents
    # 3. Architecture diagrams
    # 4. Implementation code
    # 5. Tests


if __name__ == "__main__":
    asyncio.run(main())
```

Camel-AI (CAMEL - Communicative Agents for Machine Learning) is an open-source multi-agent framework that enables autonomous agents to collaborate, communicate, and solve complex tasks.

```python
from camel.agents import ChatAgent
from camel.messages import BaseMessage
from camel.models import ModelFactory
from camel.types import ModelPlatformType, ModelType

# Note: import paths and constructor arguments follow recent CAMEL releases
# and may differ in older versions.


def main():
    # Create the model backend shared by both agents
    model = ModelFactory.create(
        model_platform=ModelPlatformType.OPENAI,
        model_type=ModelType.GPT_4,
    )

    # Create two agents with different roles
    user_agent = ChatAgent(
        system_message="You are a user who needs help analyzing data about AI frameworks.",
        model=model,
    )
    assistant_agent = ChatAgent(
        system_message="You are an AI assistant specialized in data analysis and AI frameworks.",
        model=model,
    )

    # Initial message from the user agent
    user_message = BaseMessage.make_user_message(
        role_name="User",
        content="I need to compare different AI agent frameworks for my project. Can you help me analyze their features?",
    )

    # Start the conversation (step() is synchronous and returns a response
    # object whose .msg holds the reply message)
    assistant_response = assistant_agent.step(user_message)
    print(f"Assistant: {assistant_response.msg.content}\n")

    # Continue the conversation
    for _ in range(3):  # Simulate a few turns of conversation
        to_user = BaseMessage.make_user_message(
            role_name="Assistant", content=assistant_response.msg.content
        )
        user_response = user_agent.step(to_user)
        print(f"User: {user_response.msg.content}\n")

        to_assistant = BaseMessage.make_user_message(
            role_name="User", content=user_response.msg.content
        )
        assistant_response = assistant_agent.step(to_assistant)
        print(f"Assistant: {assistant_response.msg.content}\n")


if __name__ == "__main__":
    main()
```

When evaluating AI agent frameworks, consider these important factors:

The AI agent landscape continues to evolve with several notable trends:

When selecting an AI agent framework for your project, consider:

The AI agent framework landscape is rapidly evolving, with open-source solutions leading the way in innovation and flexibility. For developers looking to build sophisticated AI applications, these frameworks provide the tools and infrastructure needed to create intelligent, autonomous systems.

Whether you need a framework for building conversational agents, multi-agent collaborative systems, or complex workflow automation, the 11 frameworks covered in this guide offer a range of options to suit different requirements and technical expertise levels.

As AI agent technology continues to advance, staying informed about the capabilities and limitations of these frameworks will be crucial for developers looking to leverage the full potential of AI in their applications.